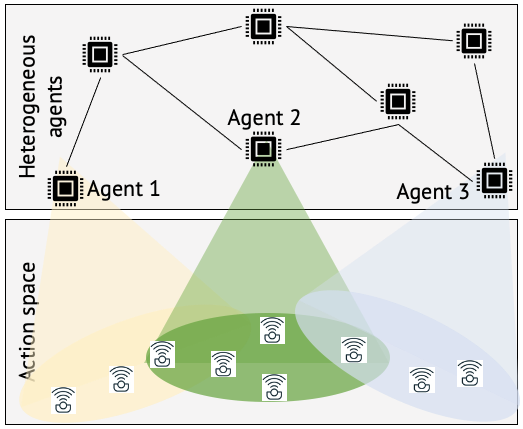

Future machine learning will become increasingly distributed, both because learning tasks are growing in scale and because they must run across a heterogeneous set of edge devices. In this project, we explore how cooperation can improve the performance of integrated learning among multiple heterogeneous, asynchronous, and possibly corrupted edge nodes, and we develop robust algorithms for cooperative multi-agent learning in this setting.

In particular, we advance the foundations of online learning, a well-established mathematical framework for decision-making under uncertainty, and extend it to a setting in which multiple heterogeneous agents, each representing a different node, cooperate to solve integrated learning tasks.

Our model captures the heterogeneity of agents in two ways:

- agents have heterogeneous access to the action space.

- agents are asynchronous and have different computing capabilities.
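
The two forms of heterogeneity can be illustrated with a toy simulation. The sketch below is not the project's algorithm. It assumes a stochastic multi-armed bandit with Bernoulli rewards, a standard UCB1 index, and agents that share every observation through a common pool. The function name `cooperative_ucb`, the per-agent `action_sets` (a stand-in for heterogeneous access to the action space), and the per-round `activation_probs` (a crude stand-in for asynchrony caused by different computing capabilities) are all hypothetical.

```python
import math
import random


def cooperative_ucb(mu, action_sets, activation_probs, horizon, seed=0):
    """Hypothetical toy model: cooperative UCB1 with heterogeneous agents.

    mu: Bernoulli mean reward of each arm (known only to the simulator).
    action_sets: one list of arm indices per agent (heterogeneous access).
    activation_probs: per-agent probability of acting in a round (asynchrony).
    Returns each agent's cumulative pseudo-regret against the best arm it can access.
    """
    rng = random.Random(seed)
    counts = [0] * len(mu)    # pooled number of pulls per arm, shared by all agents
    sums = [0.0] * len(mu)    # pooled reward totals per arm
    regret = [0.0] * len(action_sets)
    best_in_set = [max(mu[k] for k in arms) for arms in action_sets]

    for _ in range(horizon):
        # Each agent independently decides whether it is ready to act this round.
        active = [m for m, p in enumerate(activation_probs) if rng.random() < p]
        observations = []
        for m in active:
            arms = action_sets[m]
            untried = [k for k in arms if counts[k] == 0]
            if untried:
                arm = untried[0]  # pull each accessible arm once before using UCB
            else:
                total = sum(counts)
                arm = max(arms, key=lambda k: sums[k] / counts[k]
                          + math.sqrt(2 * math.log(total) / counts[k]))
            reward = 1.0 if rng.random() < mu[arm] else 0.0
            observations.append((arm, reward))
            regret[m] += best_in_set[m] - mu[arm]
        # Share this round's observations with every agent via the common pool.
        for arm, reward in observations:
            counts[arm] += 1
            sums[arm] += reward
    return regret


mu = [0.2, 0.5, 0.7, 0.4]
action_sets = [[0, 1], [1, 2, 3], [0, 2]]
activation_probs = [1.0, 0.5, 0.2]
print(cooperative_ucb(mu, action_sets, activation_probs, horizon=5000))
```

Because statistics are pooled, a slow agent benefits from exploration performed by faster agents on the arms they share. Broadcasting every observation is the simplest possible choice and does not reflect the low-communication goal stated below; it also ignores corrupted agents.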

The ultimate design goal is to achieve the best system-wide learning performance, distribute the cost of exploration fairly among agents, and deliver these improvements with low communication overhead.